As advances in agent technology accelerate, a wider conversation is emerging about how much autonomy these systems should truly be given — and how we’ll actually know when they’re competent enough to handle the hard, unpredictable work that business and life demand. In practical terms, that means we need reliable ways to evaluate how well these digital workers can solve real-world problems, at scale, without causing chaos.

“We're heading toward a world where anyone can be a CEO,” says Mike Merrill, a machine learning scientist and postdoctoral researcher at Stanford’s Department of Computer Science. “Projects won’t be limited by labor — they will be limited by creativity. But for that world to happen, we need to have confidence in our agents' abilities to execute autonomously and safely.”

To address this, Merrill is co-leading a new project called Terminal-Bench, an evaluation and benchmarking suite that stress-tests AI’s true technical prowess.

For context, humans and AI agents can interact with machines through graphical user interfaces (GUIs), such as web browsers and desktop applications. Alternatively, they can work through the command-line interface (CLI), a terminal-based environment that offers greater flexibility, granularity, and control. CLIs rely on raw text and typed commands, and they’re not for the novice: With minimal safety nets, a single mistyped instruction can nuke an entire database.

Written by Paul Sawers

Photography by Chloe Aftel

Design by Jennifer DeVoid

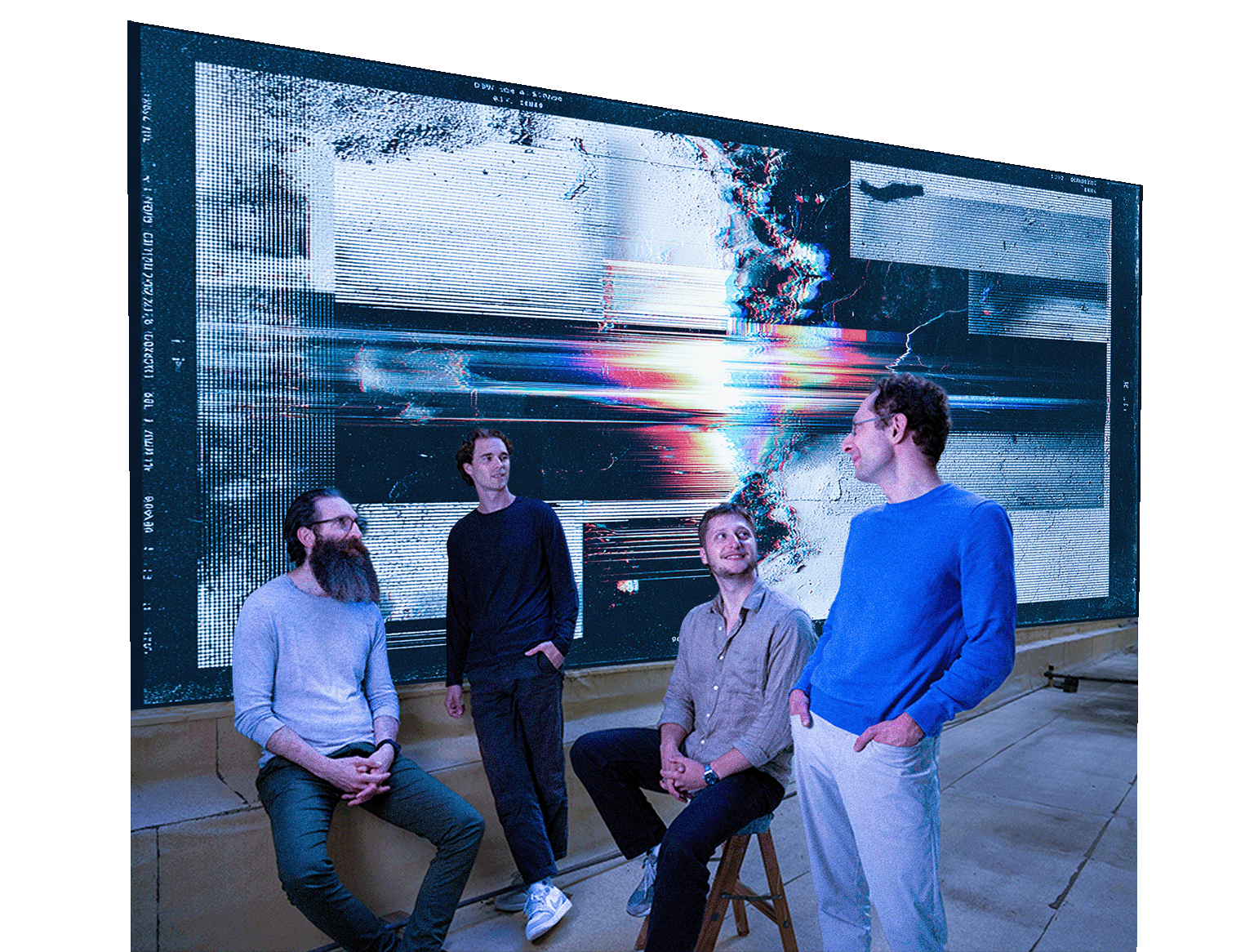

Terminal-Bench is a collaborative, open-source project backed by a crack team of AI researchers, engineers, and entrepreneurs. This includes Databricks and Perplexity co-founder Andy Konwinski, who recently stood up Laude Institute to help top computer science researchers transition their work into real-world applications, and Ludwig Schmidt, assistant professor of computer science at Stanford University, who advises on the project alongside Konwinski.

With Terminal-Bench, Merrill builds on a body of his own research that includes developing methods and datasets for training and evaluating language models, with industry experience through research and internship roles at Apple and Google. Co-leading the project alongside Merrill is Alex Shaw, who left a software engineering role at Google, where he was working on conversion modeling for ad recommendations, to become a founding member of technical staff at Laude Institute.

The Team

Andy Konwinski, advisor

Ludwig Schmidt, advisor

Mike Merrill, co-creator

Alex Shaw, co-creator

Shaw, who committed the first code to Terminal-Bench in January 2025, says terminals are ideal environments for large language models (LLMs) and AI agents, but first, we must build trust.

“The terminal is just a text-based way of controlling a computer — in fact, the earliest computers were just terminals,” Shaw said. “And language models excel at text-based tasks, so building Terminal-Bench is essentially about creating a computer-use benchmark.”

While terminal environments like the Linux command line have long been central to human-computer interaction, they are also becoming the standard interface for AI coding agents such as Anthropic’s Claude Code, OpenAI’s Codex, Block’s Goose, and Anysphere’s Cursor. But current AI agents can’t yet exploit terminals to their full potential: they get stuck trying to execute multi-step command sequences, or become overly sure of themselves.

“I think the degree of flexibility [in the terminal] can be challenging — it’s powerful, but that also means so many ways for things to go wrong. You can delete every file on your computer very easily,” Merrill said.

Taskmasters

Terminal-Bench provides the tools and data to help quantify an agent’s command-line competence: chaining commands, interpreting feedback, and acting independently. It does this through tasks, a curated set of challenges that simulate real-world workflows in secure, containerized environments. Each task includes a plain-English description of what needs to be accomplished (e.g., “implement a MIPS interpreter in JavaScript to run a provided ELF binary”), along with a corresponding solution and a test script to verify that the agent completed the task correctly.
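To make the shape of a task concrete, here is a minimal Python sketch of the three ingredients described above: an instruction, a reference solution, and a test. It is an illustration only, not Terminal-Bench's actual task format or API. The names `Task`, `evaluate`, `check_hello`, and `HELLO_TASK` are hypothetical, and a temporary directory stands in for the containerized environment the real benchmark uses.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, List


@dataclass
class Task:
    """Hypothetical task record: instruction, reference solution, and test."""
    instruction: str               # plain-English description shown to the agent
    solution: List[List[str]]      # reference commands (argv form) that should pass
    test: Callable[[Path], bool]   # inspects the final state of the working directory


def evaluate(task: Task, commands: List[List[str]]) -> bool:
    """Run commands in a fresh scratch directory, then apply the task's test.

    A temporary directory is only a stand-in for a real sandbox; it does not
    isolate the host the way a container would.
    """
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        for argv in commands:
            subprocess.run(argv, cwd=workdir, capture_output=True, timeout=30)
        return task.test(workdir)


def check_hello(workdir: Path) -> bool:
    """Test script for the toy task: hello.txt must exist and contain 'hello'."""
    path = workdir / "hello.txt"
    return path.is_file() and path.read_text().strip() == "hello"


# A toy task; sys.executable is used so the commands run on any platform.
HELLO_TASK = Task(
    instruction="Create a file named hello.txt containing the word hello.",
    solution=[[sys.executable, "-c", "open('hello.txt', 'w').write('hello')"]],
    test=check_hello,
)

if __name__ == "__main__":
    print("reference solution passes:", evaluate(HELLO_TASK, HELLO_TASK.solution))
    print("doing nothing passes:", evaluate(HELLO_TASK, []))
```

Running the reference solution through the same harness as an agent's commands is a cheap sanity check that a task is actually solvable and that its test is not trivially satisfied.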

“The good thing about Terminal-Bench is that there's almost no limit to how difficult you can make a task,” Shaw said. “We have an initial set of tasks, but we're going to make more, and we can make them harder by requiring more interactions and longer time horizons.”

Terminal-Bench has hundreds of tasks available at the time of writing, spanning software engineering, scientific workflows, security, system administration, model training, and more. Some tasks are created internally by the Terminal-Bench team, but as an open-source project, it encourages contributions from the wider community of researchers and developers. Contributors include esteemed AI safety researcher Nicholas Carlini, who joined Anthropic from DeepMind in early 2025, and who has contributed more than 10% of the tasks to date. Elsewhere, the folks at data-focused AI company Snorkel AI recently announced their collaboration on Terminal-Bench 2.0, contributing new tasks and helping refine the benchmark’s evaluation methodology.

Community contributions are integral, as they open things up to some of the world’s top engineers and researchers who have encountered all manner of technical challenges through their careers.

“Because they’re made by members of the community, we believe that they're very realistic tasks that someone would actually need to do,” Merrill said. “But we have a very high bar in terms of tasks we actually merge into the main repository. We spend a lot of time working with contributors to make sure that their tasks meet our standards.”

From Idea to Industry-Standard Eval

Dec 11 2024: Konwinski & Schmidt talk CLI benchmarking at NeurIPS

Jan 16 2025: First T-Bench team meeting at Stanford

Jan 17: Initial commit by Shaw

Jan 20: Merrill’s first commit

Mar 16: Repo is open-sourced

Mar 23: First commit by Carlini

Apr 17: First customer call (OpenHands)

May 8: First power user (Claude Code)

May 19: Terminal-Bench launches

May 22: Anthropic C4 release cites Terminal-Bench

July 16: Launched Terminal-Bench registry

Nov 7: Terminal-Bench 2.0 & Harbor released

Just days after Terminal-Bench was presented to the world in May 2025, Anthropic CEO Dario Amodei took to the stage at the inaugural Code with Claude developer conference in San Francisco, where he introduced the company’s latest suite of LLMs. In his opening gambit for the flagship Claude Opus 4 model, which is optimized for complex, long-running coding tasks and deep reasoning, Amodei name-checked two specific benchmarks.

“Let's talk about Opus,” Amodei said. “It is especially designed for coding and agentic tasks, [and] it gets state-of-the-art on SWE-Bench [and] Terminal-Bench…”

This mention, while fleeting, served as a major boost for Terminal-Bench in its quest to become an AI benchmarking standard, much like SWE-Bench before it. Introduced in October 2023, SWE-Bench is a popular benchmark for evaluating AI coding agents, but rather than assessing actions in the command line, SWE-Bench is all about editing code and fixing bugs in GitHub repositories. Both benchmarks are important, though, as they test complementary skill sets essential for agents to function as fully capable engineers.

At the same time, the field has grown more exacting. K Prize, a “contamination-free” follow-up to SWE-Bench by Konwinski (and the project that brought Shaw onto the Laude Institute founding team), was built to expose how well models can handle problems they’ve never encountered. To prevent data leakage, its test set is assembled only after the competition begins, using fresh GitHub issues collected post-training. The result? A first-round leaderboard topped out at just 7.5% — a humbling reminder of how far coding agents still have to go.
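The contamination-free idea comes down to a date filter: only issues created after a cutoff can appear in the test set, so no model could have seen them during training. The Python sketch below illustrates that principle under stated assumptions. The `Issue` record, the `contamination_free` helper, the cutoff date, and the sample issues are all hypothetical, and this is not K Prize's actual pipeline.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class Issue:
    """Hypothetical record for a GitHub issue considered for the test set."""
    issue_id: str
    created_at: datetime


def contamination_free(issues: List[Issue], cutoff: datetime) -> List[Issue]:
    """Keep only issues created strictly after the cutoff."""
    return [issue for issue in issues if issue.created_at > cutoff]


# Illustrative data only: the cutoff and the issues are made up.
CUTOFF = datetime(2025, 1, 1, tzinfo=timezone.utc)
CANDIDATES = [
    Issue("old-bug", datetime(2024, 11, 3, tzinfo=timezone.utc)),
    Issue("fresh-bug", datetime(2025, 2, 14, tzinfo=timezone.utc)),
    Issue("on-cutoff", CUTOFF),
]

if __name__ == "__main__":
    for issue in contamination_free(CANDIDATES, CUTOFF):
        print(issue.issue_id, issue.created_at.date())
```

The strict comparison is a deliberate choice in this sketch: anything created at or before the cutoff is treated as potentially seen.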

“The first step in getting something to work well is being able to measure it effectively,” Merrill said. “Part of the success that we've seen with coding agents this past year has been because of SWE-Bench — it created a very difficult benchmark to hill-climb on. We want to see that happen with agents in the command-line.”

As with sibling benchmarks, Terminal-Bench has a leaderboard, which includes a full list of the top-performing agents. Developers are free to benchmark their agents in private, but to be featured on the leaderboard, they need to submit their logs to Terminal-Bench.

Tasks are difficult, and deliberately so. In the weeks following its launch, the top-performing agent on Terminal-Bench was Anthropic’s Claude Code, with an accuracy rating of just 43.2%. To the layperson, this might have seemed low — but this is actually a good sign from an evaluation perspective, as it means the benchmark will hold value well into the future.

In the intervening months, Terminal-Bench has evolved into a proving ground for the world’s most capable AI agents from the likes of OpenAI, Warp, Factory, and Block. As of early November 2025, Anthropic’s Claude models are stealing the show, powering 16 of the 20 top-performing agents. Leading the pack is Apex2, an agent developed by TianJian Wang and built on Claude Sonnet 4.5, with an accuracy of 64.5%. Just behind is Ante, a Claude-powered agent from Antigma Labs that has attained a score of 60.3%.

But while the agents’ command-line competence is on the up, the scores indicate they are still far from masters at complex, open-ended terminal workflows.

“The funny thing is, we would almost prefer that number to be lower — it would be a more positive signal,” Shaw added. “I think people like really hard benchmarks right now. If current models were scoring, say, 90% — next year's model scoring 95% wouldn’t seem that much better. Whereas at 60%, this shows that there's still so much room for growth.”

So if next year’s top coding agents score, say, 70% on Terminal-Bench, followed by 80% and 90% in subsequent years, that would demonstrate that the models truly have improved.

But perhaps over and above all that, this trend also highlights an important shift: Performing well on Terminal-Bench isn’t just a function of having the best foundation model; it’s also about the agent layer on top. Many of the top submissions use custom shells, memory managers, or process orchestrators that let them interact more robustly with the command line. These engineering choices, rather than the underlying model alone, are driving the current performance frontier.
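As a rough illustration of what an "agent layer" means, the Python sketch below wraps a decision function in a loop that runs commands, records exit codes, truncates long output (a crude stand-in for memory management), and enforces a step limit. It is a toy under stated assumptions, not how any leaderboard agent is built. `run_agent`, `scripted_policy`, and the `Step` and `Policy` types are hypothetical, and the scripted policy stands in for a language model.

```python
import subprocess
import sys
import tempfile
from typing import Callable, List, Optional, Tuple

# One step of history: (command argv, exit code, truncated output).
Step = Tuple[List[str], int, str]
# A policy sees the history so far and returns the next command, or None to stop.
Policy = Callable[[List[Step]], Optional[List[str]]]


def run_agent(policy: Policy, workdir: str, max_steps: int = 10,
              max_output_chars: int = 2000) -> List[Step]:
    """Minimal agent loop: ask the policy for a command, run it, record the result."""
    history: List[Step] = []
    for _ in range(max_steps):
        argv = policy(history)
        if argv is None:
            break
        proc = subprocess.run(argv, cwd=workdir, capture_output=True,
                              text=True, timeout=30)
        # Keep only the tail of the output so the history stays bounded.
        output = (proc.stdout + proc.stderr)[-max_output_chars:]
        history.append((argv, proc.returncode, output))
    return history


def scripted_policy(history: List[Step]) -> Optional[List[str]]:
    """Stand-in for a model: issue a failing command, then react to the failure."""
    if not history:
        return [sys.executable, "-c", "import sys; sys.exit(3)"]
    if history[-1][1] != 0 and len(history) < 2:
        return [sys.executable, "-c", "print('recovered')"]
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for argv, code, output in run_agent(scripted_policy, tmp):
            print(code, output.strip())
```

Everything outside `scripted_policy` is ordinary engineering: limits, truncation, and bookkeeping. That is the kind of scaffolding that can differ between two agents built on the same model.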

Meeting of Minds

The Terminal-Bench project itself originated with Konwinski and Schmidt, who met at NeurIPS, a machine learning and AI conference, in December 2024, along with members of their respective teams, to discuss potential collaborations. As it turned out, they were already thinking along similar lines.

“I met Ludwig around four years ago and made some mental notes, as he was a special kind of guy,” Konwinski said. “And at NeurIPS, we pitched each other our ideas, and they were essentially the same — the ‘terminal’ as one tool to rule them all, and a benchmark that uses the terminal.”

Schmidt’s research centers on the foundations of machine learning, including AI evaluations. Among this work is ImageNetV2, a follow-on to the landmark ImageNet dataset, which became the standard for training and benchmarking computer vision models. While ImageNetV2 was similar in scope to its predecessor, Schmidt’s team found that many models performed worse on ImageNetV2, highlighting potential “overfitting” to the original benchmark. It revealed that some models may not truly “understand” concepts, much like a student who crams for a test but is then unable to generalize their knowledge.

While ImageNetV2 and Terminal-Bench focus on very different domains, there are valuable lessons to be drawn from the former.

“I learned how important it is for this field to have good benchmarks, because you can guide model development with these benchmarks,” Schmidt said. “Because of ImageNetV2, I also learned that building a good benchmark just takes a lot of time. You need to set up the tasks well, do a lot of quality assurance to make sure the grading of the agent — or the image-classifier — is correct.”

Terminal-Bench also presents a new challenge in terms of designing robust tasks. With ImageNetV2, it was a case of gathering thousands of images, labeling them, and testing the model against the labeled images. Designing a process for constructing tasks in Terminal-Bench is more challenging, relying substantively on specialized, expert knowledge. And this is partly why Terminal-Bench needs to be an open, collaborative project.

“One of the reasons why we built this community around Terminal-Bench was that we really need to crowdsource experts who know AI and computer science really well, in order to build good tasks for Terminal-Bench,” Schmidt said. “I like it when benchmarks are aligned with what people will eventually want the models to be used for.”

This approach has been pivotal to much of Schmidt’s academic work, including OpenCLIP, an open-source reproduction of OpenAI’s CLIP (Contrastive Language-Image Pretraining) which connects images and text to understand an image based on natural language descriptions, rather than fixed labels.

"Open source, for me, is just an extension of doing science in the open — if we think about how science works, a lot of it is people reading others’ papers, building on each other's ideas," Schmidt said. "When we do open-source AI, it’s because we think it's important to share all of these artifacts, and make it possible for more people to contribute so they can train their own models.”

And for Terminal-Bench, its open-source credentials have helped it secure a faster adoption rate because users can self-serve and contribute with minimal friction.

“We decided to put everything out in the open because we want to move as quickly as possible,” Merrill said. “We don't want this delay of agent-makers building their agent or model, and having to come to us to evaluate it.”

The Laude Giveth

Terminal-Bench represents a close collaboration between Laude Institute and leading Stanford researchers, setting the precedent as the very first project in Laude’s Slingshots program — an initiative built to turn promising AI research into tangible, high-impact artifacts. Other projects in the inaugural Slingshots batch are similarly high-caliber efforts from top-tier researchers, from foundational benchmarks like ARC-AGI-3 and CodeClash, to agent frameworks (SkyRL, rLLM, SGLang), optimization tools (DSPy, GEPA), and major applied efforts in biomedicine (Biomni) and enterprise (BizBench).

Laude Institute itself is purpose-built to accelerate the real-world impact of computer science research, providing fast grants, hands-on support, and the connective tissue needed for projects to move from research to deployment. The organization is anchored by a $100M pledge from Konwinski (who is also a co-founder of the independent venture capital firm, Laude Ventures), and counts some of the world’s top computer scientists and AI researchers among its close community (Jeff Dean, Dave Patterson, and Joelle Pineau serve on the board, with Matei Zaharia, Ion Stoica, Chris Ré, Thomas Wolf, Ali Ghodsi, and Yejin Choi serving as research advisors).

“At Laude, we’re looking for the top 0.1% of researchers, and we look for researchers who ship. We call them ‘impact researchers’,” said Konwinski, whose own journey as a computer scientist and founder heavily informed the playbook behind Laude’s approach to helping researchers ship their work. Konwinski’s trajectory runs from co-creating Apache Spark during his Berkeley days to co-founding Databricks and Perplexity. Suffice it to say, he knows a thing or two about shipping research and building businesses, a track record that serves as the blueprint for Slingshots.

There is plenty of important work going on across all the top universities today, some of which may not bear fruit for 10 or 20 years — maybe longer (if at all). With Slingshots, Laude Institute is targeting a corner of academia seeking a payoff closer to the five-year time frame. Such a payoff could mean creating an impactful open-source project (“the next Apache Spark or Linux”) adopted by researchers and developers globally. Or, it could mean translating research into a startup, an outcome that Laude is well-positioned to support. The Institute can provide grants, infrastructure, engineering, technical guidance, marketing, and more, with Laude Ventures stepping in if the research matures into a product befitting a business.

But while the nature of the final payoff isn’t fixed, two key aspects of Laude’s approach are: Qualifying projects should follow an entirely “open” ethos, with AI a central ingredient.

“Laude Institute is ‘open-everything’ — open model, open weights, open source, open discourse, open publications,” Konwinski said. “Our bets are going to be in areas like reasoning models, open-source models, and small models — things that academics can be competitive at by taking big swings at interdisciplinary research.”

And this gets to the crux of what Laude is striving for: It’s fostering a community of top research minds and providing the support to give fledgling projects the best chance of succeeding.

“We think of Laude as an organization that gives the right resources, to the right researchers, at the right time,” Konwinski said.

“Due to Andy's mentorship and framing of impact research as analogous to a startup, we spent a ton of time thinking about usability, ease of adoption, and meeting with potential customers — something academic projects rarely do,” said Shaw. “Laude’s emphasis on usability and GTM really led us to really agonize over the details of how the benchmark can be easily adopted and tasks easily created — specifically by creating a simple but flexible framework, tooling to help speed up task creation and quality evaluation, great documentation, a beautiful task gallery to market the benchmark, and creating simple ways to install and run agents in like ~20 lines of code.”

The road to 2.0: Measuring success

Some six months on from its formal unveiling, the Terminal-Bench team is in the midst of rolling out version 2.0 — a major upgrade described by Shaw as “bigger, higher-quality, and harder” than its predecessor.

“We originally released Terminal-Bench 1.0 as a ‘beta’ dataset to get early feedback — we didn’t know it would be adopted so quickly,” Shaw explained. “Looking back, there are so many things we knew we could improve about the benchmark. In some ways, Terminal-Bench 2.0 is closer to our original vision for the benchmark and demonstrates Terminal-Bench's ability to express a diverse set of tasks.”

And so version 2.0 ushers in refined, more-relevant tasks (removing early “game-style” tests) and demonstrates the benchmark’s ability to represent a broader diversity of real-world command-line challenges. Alongside this, the team is also unveiling a new open-source framework dubbed Harbor, which generalizes the Terminal-Bench evaluation environment for use in agent research and training.

“Harbor is the culmination of all of our lessons learned from creating Terminal-Bench,” Shaw said. “It accounts for the diversity of use cases and is more modular with well-defined interfaces to support things like cloud deployments and running at scale.”

This all builds on other recent updates, such as the Terminal-Bench Dataset Registry introduced in July that enables external benchmarks such as SWE-Bench to be integrated and evaluated through the same standardized framework.

Together, these upgrades reflect the project’s rapid maturation and adoption across the industry, with some 18 distinct agent organizations now appearing on the Terminal-Bench leaderboard. And this speed of movement from idea to industry standard has, to some degree, caught the folks at Laude a smidgen off-guard.

“It was so unexpected — we knew we had built a great benchmark that we were proud of, but even we were surprised by the level of interest and adoption,” Shaw said. “We hope that having a common framework that drastically simplifies using and creating agentic evaluations will 10 or 100x the number of evals being created and accelerate model and agent development.”

With Terminal-Bench still in its infancy, its future remains fluid — a script yet to be executed. Defining what “success” looks like will depend on what’s being measured, whether that’s birthing a commercial business, or cementing its position as the de facto standard for measuring agents’ command-line competence.

But if AI agents were to gain true terminal mastery, the ramifications could be profound: complex engineering tasks entirely automated, increasing efficiency across vast infrastructure and codebases. If the project delivers on its promise — to quantify, and ultimately accelerate, real-world agent skills — then the days when terminal mastery belonged to a handful of engineers may be numbered.

“There’s a tendency right now to think of the terminal as ‘just another tool’ that agents have amongst hundreds of other tools,” Shaw said. “People don't realize how high-leverage getting good at the terminal would be. We hope that Terminal-Bench will illustrate to people how important the terminal is, and we hope its ability to quantify agents' ability in the terminal will lead to quicker improvement.”

Slingshots // One

Laude Institute’s very first Slingshot, Terminal-Bench keeps good company in the inaugural batch alongside projects like DSPy, GEPA, SkyRL, CodeClash, ARC-AGI-3, and more. If you’re building the next benchmark, dataset, or model that could shape the future, we want to help you do it.
